Summary

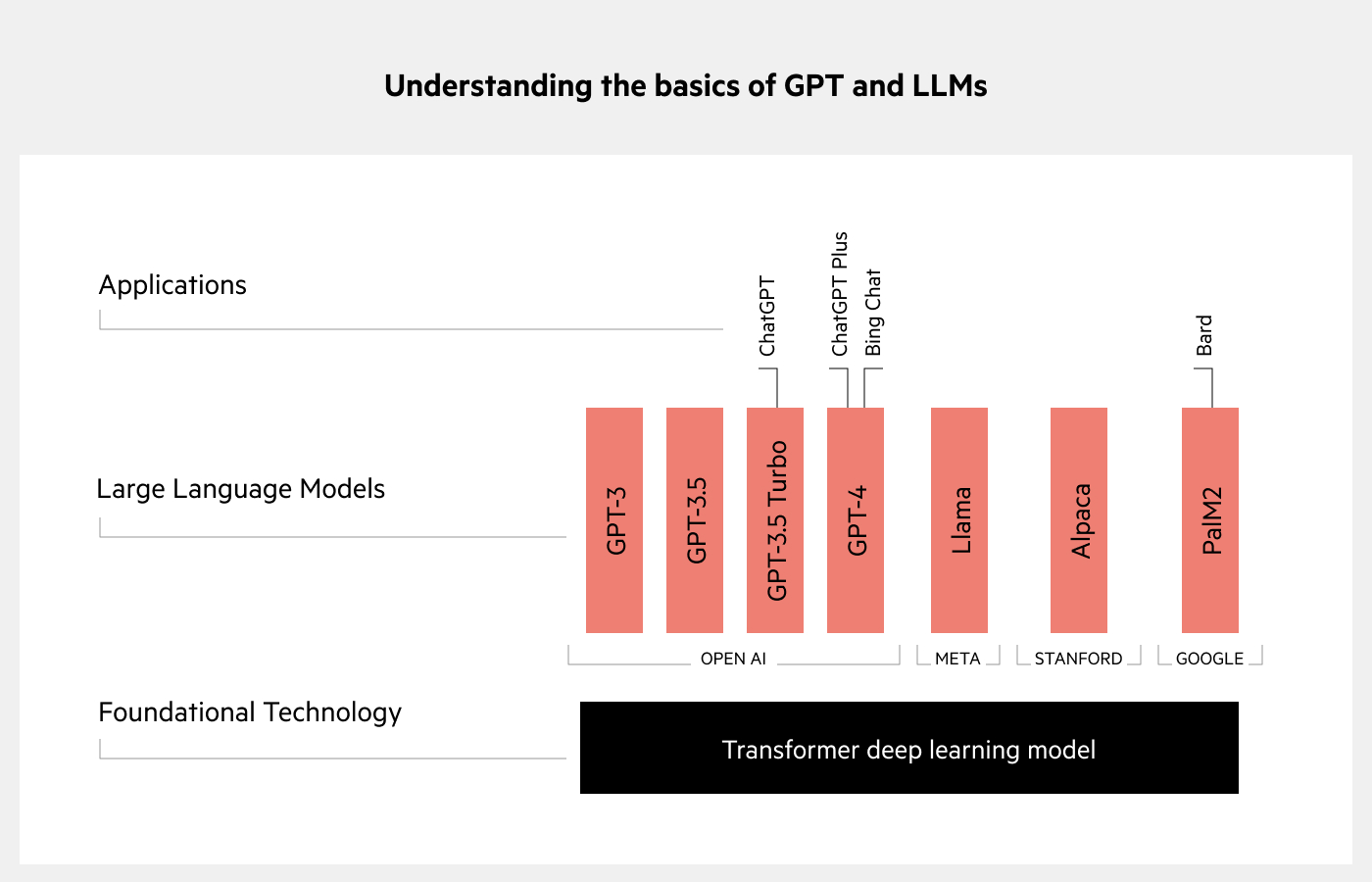

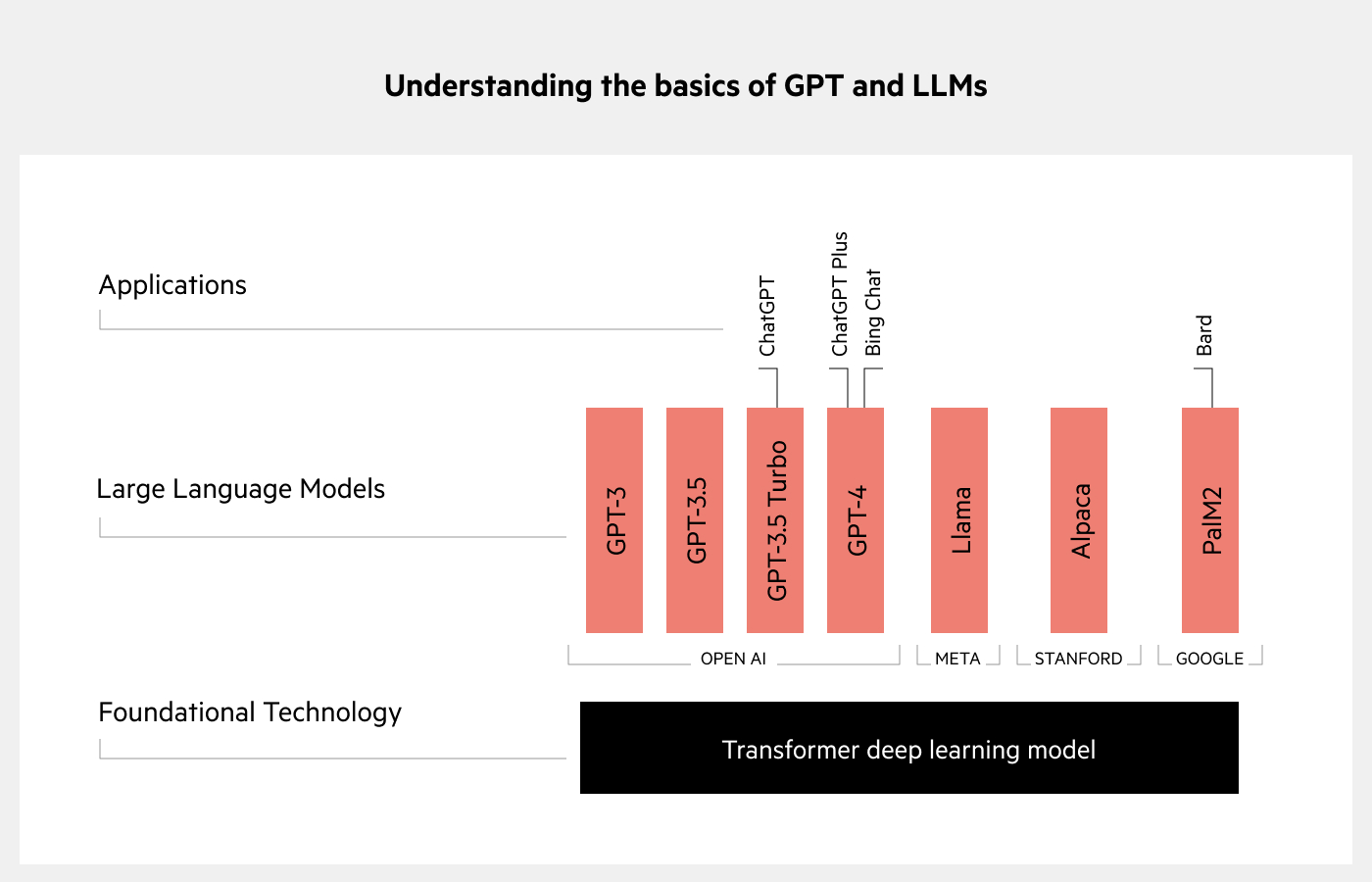

- ChatGPT and GPT are not the same thing: GPT is the foundational technology that powers ChatGPT.

- GPT is a family of AI large language models (LLMs) developed by OpenAI.

- GPT-3, -3.5, -3.5 Turbo, and -4 are different models of GPT technology.

- The free version of ChatGPT is powered by GPT-3.5 Turbo, whereas ChatGPT Plus gives you the option to use GPT-4, OpenAI’s most advanced model so far.

- Microsoft Bing Chat is also powered by GPT-4, while Bard (Google’s version of ChatGPT) is powered by Google’s own LLM, PaLM 2.

- Other LLMs, like LLaMA and Alpaca, are smaller and more efficient (but less sophisticated).

What’s more confusing: constantly changing tax laws or understanding generative AI?

Great question. Both?

As an accounting professional, chances are that you’re more stumped by the latter than the former.

So, here is your high-level guide to understanding ChatGPT, generative AI, large language models (LLMs) and how they all relate to each other.

Understanding the foundations: large language models (LLMs)

A large language model (LLM) is a deep-learning artificial intelligence model that has been trained on very large datasets of text, so that it can understand language and generate text the way a human would.

Many LLMs exist, but GPT is by far the most high-profile.

OpenAI’s ChatGPT is powered by one of those GPT LLMs (more on this later).

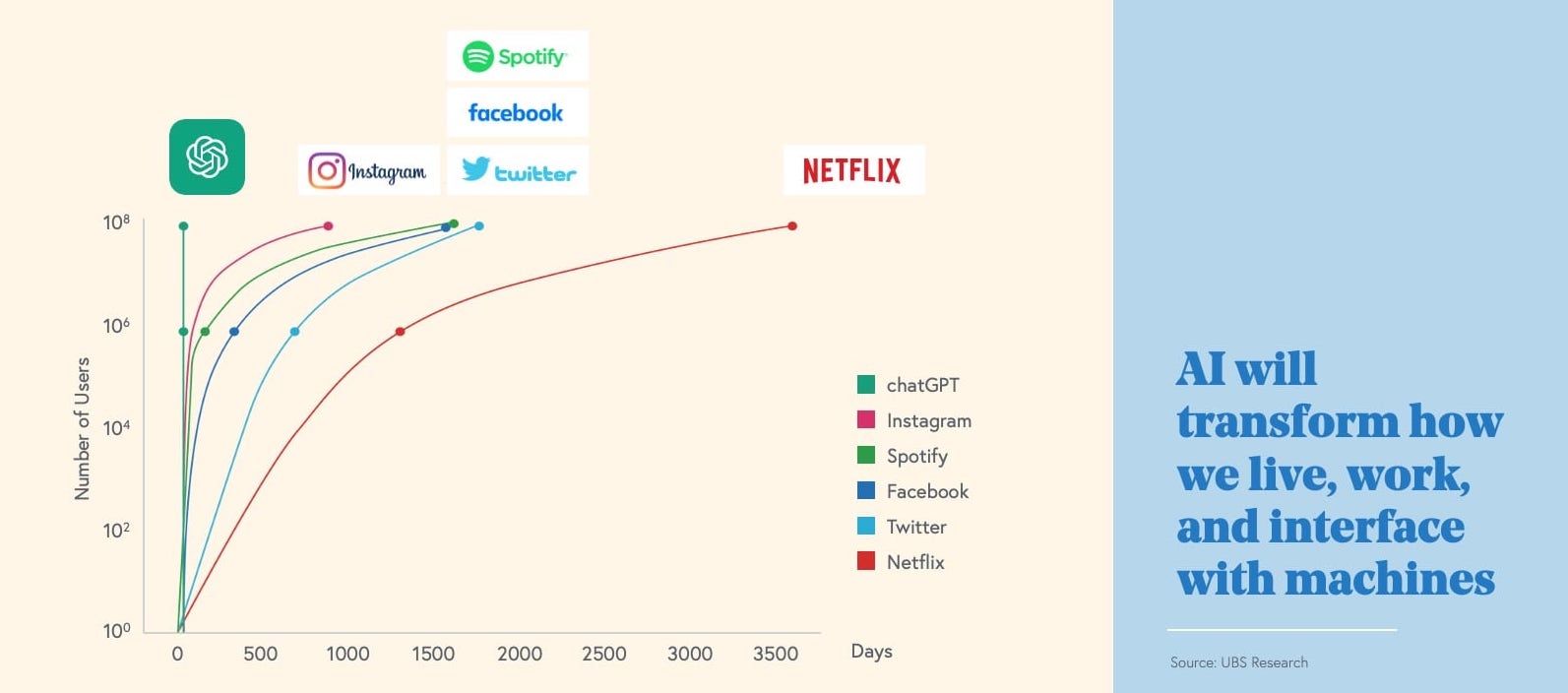

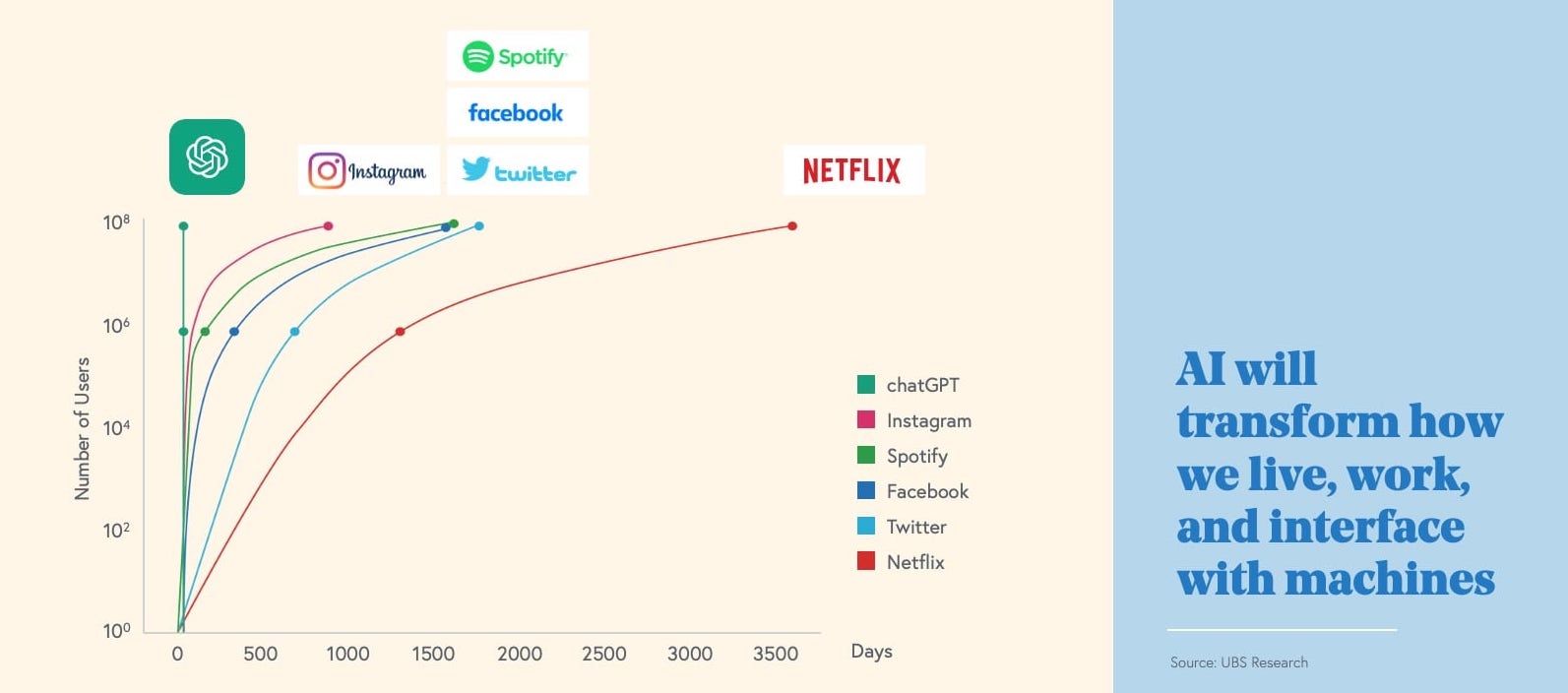

ChatGPT’s rise in popularity has been record-breaking: until the launch of Meta’s Threads app, ChatGPT was the fastest-growing app in history, reaching 100 million monthly active users in just two months.

OpenAI's ChatGPT is the second-fastest growing app in history, reaching 100 million monthly active users in just 2 months.

Source: Bessemer Venture Partners

In fact, it’s practically become a generic trademark—the Kleenex or Band-Aid of generative AI.

What’s the difference between ChatGPT and GPT?

There’s some confusion surrounding the use of ‘ChatGPT’ and ‘GPT’. While many use these terms interchangeably, they’re not the same.

GPT stands for ‘Generative Pre-trained Transformer’, and it’s the actual technology: the LLM, developed by OpenAI, that powers AI apps and gives them the ability to generate text.

ChatGPT is a specific application, also developed by OpenAI, to deliver GPT-generated information to users via a chatbot.

Here’s a useful analogy: Think of ChatGPT as a Dell computer, and GPT as the Intel processor that powers it. Different computers run on Intel processors, just like many AI apps run on GPT-3 and GPT-4.

For example, Microsoft’s Bing Chat is powered by GPT-4.

In other words, GPT is the brain and ChatGPT is the mouth.

A high-level overview of how GPT, ChatGPT and LLMs relate to each other

What’s the difference between GPT-3, GPT-3.5 and GPT-4?

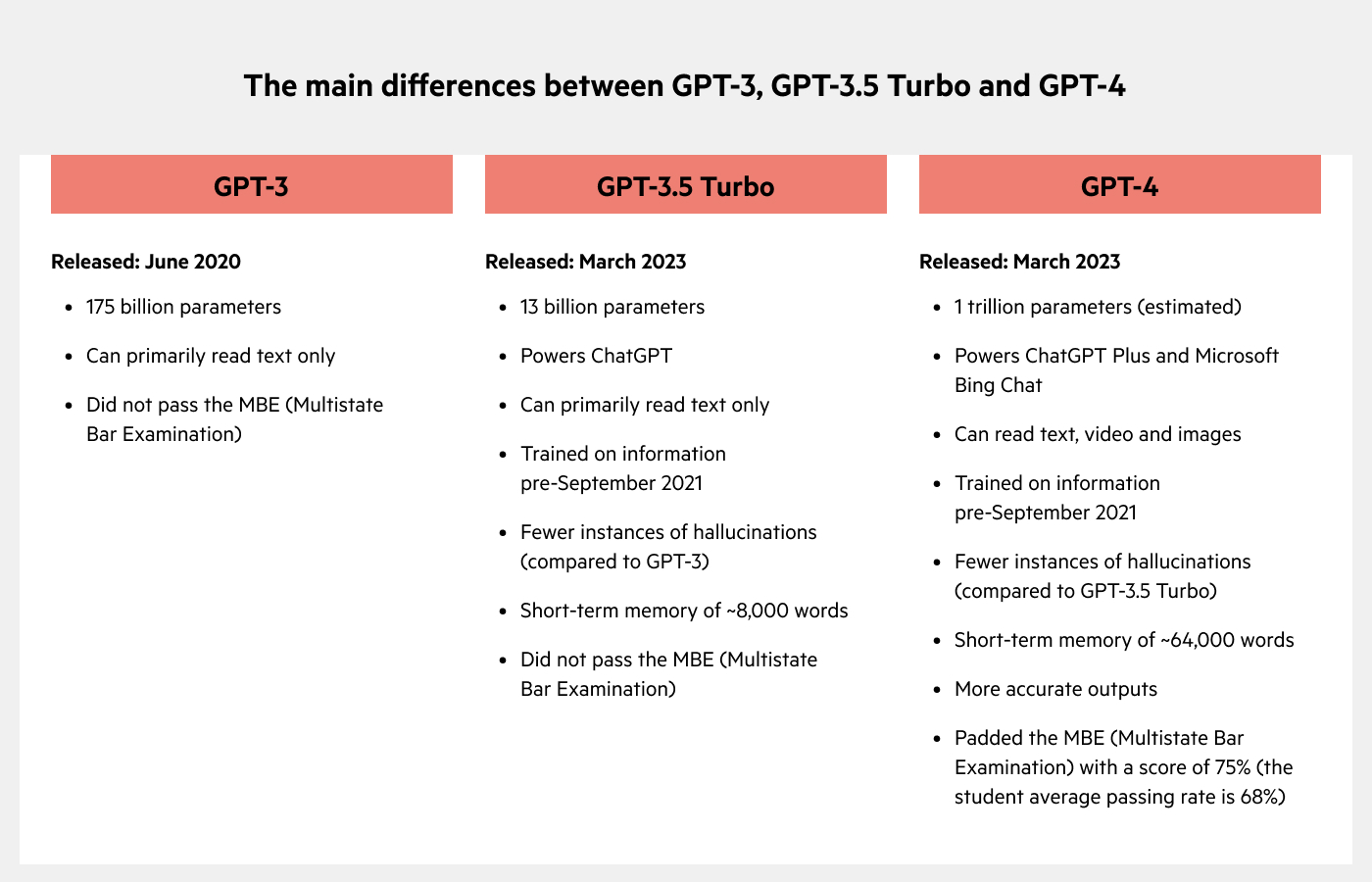

The main differences between GPT-3, GPT-3.5 Turbo and GPT-4

GPT-1, GPT-2 and GPT-3

GPT-1

Released in 2018, GPT-1 was OpenAI’s first large language model. It was trained on BookCorpus, a dataset of over 7,000 unpublished books, and had 117 million parameters. Parameters are the adjustable values (weights) that a model learns during training; broadly, the more parameters a model has, the more sophisticated the patterns it can capture and the responses it can generate.
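To make ‘parameters’ more concrete: in a single fully connected layer of a neural network, every input is linked to every output by its own weight, and each output also gets one bias value. The minimal sketch below uses a hypothetical helper, `dense_layer_params`, to count them, assuming the 768-dimensional states and 3,072-dimensional feed-forward layer reported for GPT-1. It illustrates the arithmetic only; it is not a full GPT-1 parameter count.

```python
def dense_layer_params(n_inputs: int, n_outputs: int) -> int:
    """Count the parameters in one fully connected layer (hypothetical helper)."""
    weights = n_inputs * n_outputs  # one weight per input-output connection
    biases = n_outputs              # one bias per output
    return weights + biases

# Assumed sizes: 768-dimensional states feeding a 3,072-dimensional layer, as reported for GPT-1
print(dense_layer_params(768, 3072))  # 2362368
```

One such layer already holds roughly 2.4 million parameters. A full model stacks many layers like this (alongside attention layers and word embeddings), which is how the total reaches 117 million for GPT-1 and billions for its successors.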

Despite its limitations (such as generating repetitive text), GPT-1 was a significant milestone for OpenAI.

GPT-2

In 2019, OpenAI released GPT-2, a more powerful version of GPT-1 with 1.5 billion parameters, trained on a much larger dataset (about 8 million web pages).

GPT-2 could generate human-like responses, but struggled with complex reasoning and with keeping track of context.

GPT-3

In 2020, OpenAI released GPT-3. With 175 billion parameters, it’s significantly more powerful than GPT-2. In fact, GPT-3 is over 1,000 times larger than GPT-1 and over 100 times larger than GPT-2.
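A quick check of the size comparison, dividing the parameter counts quoted in this article. This is a minimal sketch; the dictionary and function names are just for illustration.

```python
# Parameter counts as quoted in this article
params = {
    "GPT-1": 117_000_000,
    "GPT-2": 1_500_000_000,
    "GPT-3": 175_000_000_000,
}

def times_larger(bigger: str, smaller: str) -> float:
    """How many times more parameters `bigger` has than `smaller`."""
    return params[bigger] / params[smaller]

print(round(times_larger("GPT-3", "GPT-1")))  # 1496
print(round(times_larger("GPT-3", "GPT-2")))  # 117
```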

Compared with GPT-1 and -2, GPT-3 is much better at understanding context. This has powerful implications for chatbots and content creation. However, because GPT-3 was trained on large and varied amounts of data that can contain biased and inaccurate information, it can also produce biased and inaccurate responses.

GPT-3.5 and GPT-3.5 Turbo

GPT-3.5

GPT-3.5 powered the initial version of ChatGPT that took the world by storm, impressing users with its ability to generate human-sounding text and engage in coherent conversations.

Even though it came after GPT-3, some argue it’s actually slightly inferior. This is because, like any technology, it has to balance size against efficiency. Although faster, GPT-3.5 is not necessarily as knowledgeable, because it reportedly has fewer parameters (1.3 billion vs. GPT-3’s 175 billion; OpenAI hasn’t officially confirmed GPT-3.5’s parameter count).

GPT-3.5 was designed to make AI systems more natural and safe to interact with. This is achieved using Reinforcement Learning from Human Feedback (RLHF): human reviewers compare and rank the model’s responses, and those rankings are used to fine-tune the model towards the kinds of answers people prefer.
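One step of RLHF can be sketched in a few lines. Before the chatbot itself is fine-tuned, a separate ‘reward model’ learns to score responses so that the answer a human preferred scores higher than the one they rejected. A common way to train it, used for example in OpenAI’s InstructGPT work, is a pairwise loss: −log(sigmoid(preferred score − rejected score)). The sketch below assumes the scores have already been produced by some reward model (the numbers are made up) and shows only this loss; the later reinforcement-learning step is not shown.

```python
import math

def preference_loss(score_preferred: float, score_rejected: float) -> float:
    """Pairwise reward-model loss: -log(sigmoid(score_preferred - score_rejected)).

    Small when the human-preferred response already scores higher,
    large when the reward model ranks the two responses the wrong way round.
    """
    margin = score_preferred - score_rejected
    # -log(sigmoid(m)) == log(1 + exp(-m)); the two branches avoid overflowing exp()
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

# Made-up scores for two answers to the same question
print(preference_loss(2.0, 0.5))  # low loss: ranking agrees with the human
print(preference_loss(0.5, 2.0))  # high loss: ranking disagrees
```

During training, the reward model’s weights are adjusted to reduce this loss across many human-ranked pairs; the finished reward model then guides the fine-tuning of the chatbot itself.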

GPT-3.5 Turbo

Released in March 2023, GPT-3.5 Turbo can answer a wider range of questions and act on a broader set of commands, and it’s less likely to hallucinate. Hallucinations occur when AI confidently provides responses, facts and citations that are completely false.

GPT-3.5 Turbo is what you’re using if you currently use the free version of ChatGPT.

GPT-4

OpenAI released its latest model, GPT-4, in March 2023. It’s more expensive for software vendors to integrate with than GPT-3.5 Turbo, and it comes with a higher parameter count (OpenAI hasn’t confirmed the number, but some estimate 1 trillion) and refined training algorithms that produce more coherent and accurate results.

Perhaps the most exciting aspect of GPT-4 is that it’s a large multimodal model, which means it can read text and image inputs and respond with text outputs. Previous versions of GPT have been text-only. This means GPT-4 can read diagrams and screenshots, and can even explain memes.

GPT-4 is currently available through the premium ChatGPT product, ChatGPT Plus, or by joining OpenAI’s API developer waitlist.

LLaMA, Alpaca and Bard

LLaMA

LLaMA (Large Language Model Meta AI) is a lesser-known but also noteworthy LLM released by Meta AI in February 2023. It has significantly fewer parameters than GPT-3 (its versions range from 7 billion to 65 billion) and was trained only on publicly available data.

One of the main advantages of LLaMA is that it’s accessible to a wider range of users. This is because it’s more efficient than other models and was released under a non-commercial license that makes it available to researchers. But its efficiency comes from having fewer parameters, which means it’s likely less able to generate complex and sophisticated text than larger models.

Alpaca

A few weeks after LLaMA’s release, researchers from Stanford announced Alpaca, which improves LLaMA’s ability to follow instructions through supervised fine-tuning on 52,000 instruction-following examples that were themselves generated by prompting an OpenAI model.

Alpaca is a fine-tuned version of the 7-billion-parameter LLaMA model. It’s similarly efficient and accessible. And it’s cheap: generating the training data and fine-tuning the model cost less than $600 USD in total. For comparison, training GPT-3 in 2020 is estimated to have cost about $5 million USD. Even though Alpaca builds on the existing LLaMA model rather than being trained from scratch, producing a ChatGPT-like model at such a low cost is impressive.

Both LLaMA and Alpaca (at least in their smaller versions) are small enough to run on your local computer.
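A rough rule of thumb shows why: a model’s weights alone take about (number of parameters × bits per parameter ÷ 8) bytes of memory. The sketch below assumes the smallest, 7-billion-parameter version of LLaMA (the size Alpaca was built on), stored either as 16-bit numbers or compressed (‘quantized’) to 4 bits, and compares it with GPT-3’s 175 billion parameters. Real memory use is somewhat higher because of runtime overhead.

```python
def weights_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Approximate memory for a model's weights alone, in gigabytes (1 GB = 10**9 bytes)."""
    bytes_needed = n_params * bits_per_param / 8  # 8 bits per byte
    return bytes_needed / 1e9

print(weights_memory_gb(7e9, 16))    # 14.0  (LLaMA 7B, 16-bit weights)
print(weights_memory_gb(7e9, 4))     # 3.5   (LLaMA 7B, 4-bit quantized)
print(weights_memory_gb(175e9, 16))  # 350.0 (GPT-3-sized model, 16-bit weights)
```

A few gigabytes fits comfortably in a modern laptop’s memory, whereas hundreds of gigabytes calls for specialized data-center hardware.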

Bard

You may have also heard about Bard, Google’s version of ChatGPT. But unlike Microsoft’s Bing Chat, which runs on GPT-4, Bard is powered by Google’s own LLM, PaLM 2 (it was initially powered by Google’s LaMDA LLMs).

And unlike ChatGPT, Bard continuously draws information from the internet, which means its answers can reflect up-to-date information. GPT-4, on the other hand (at least for now), is trained on data up to a fixed cutoff date: September 2021 for the original GPT-4 release.

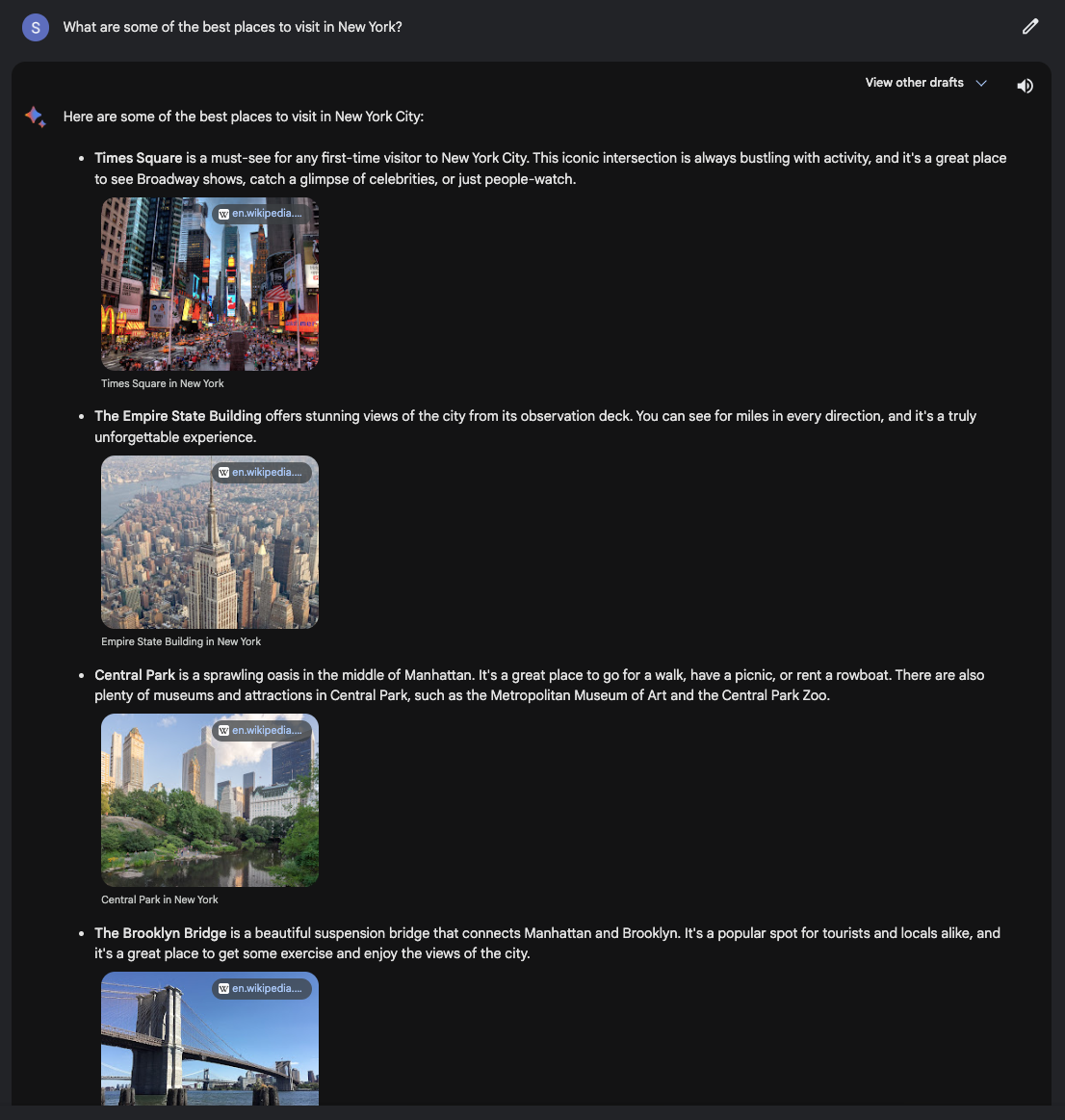

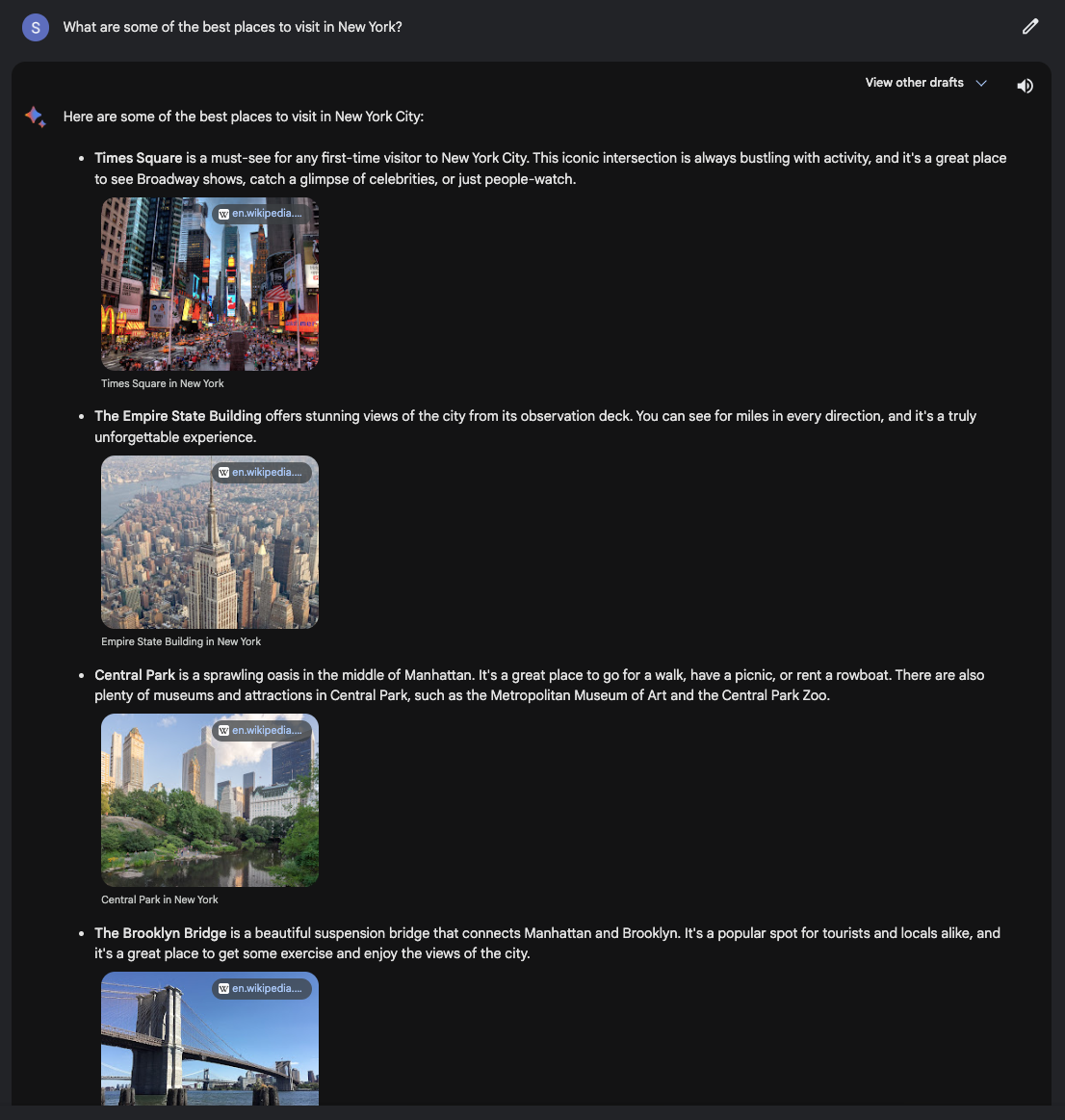

Because Bard is a Google product, it naturally presents some interesting possibilities, like including images in its output. For example, if you ask a question like the following, Bard responds with images pulled from Google to support its answer:

A screenshot of a Google Bard response that provides images in answer to the question “What are some of the best places to visit in New York?”

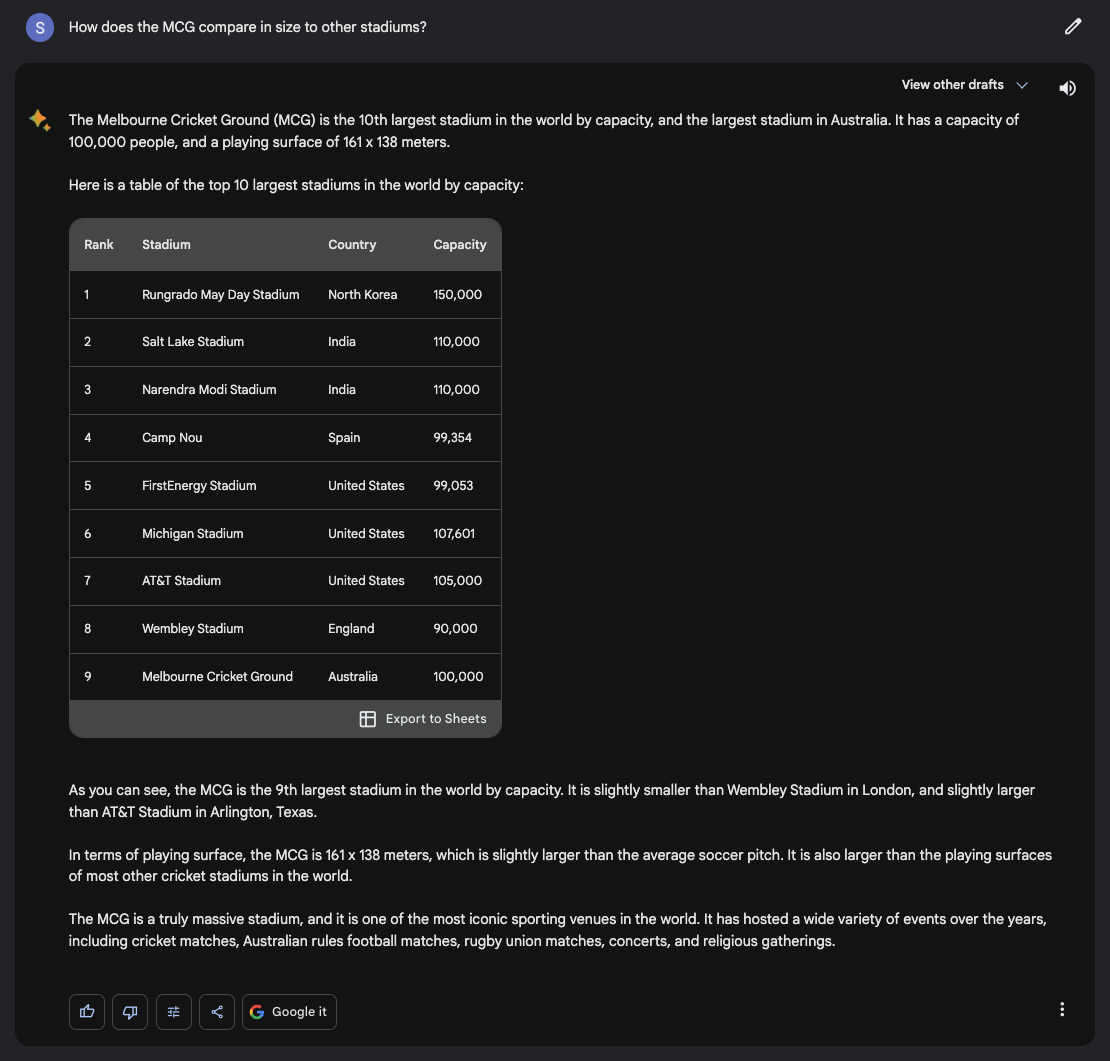

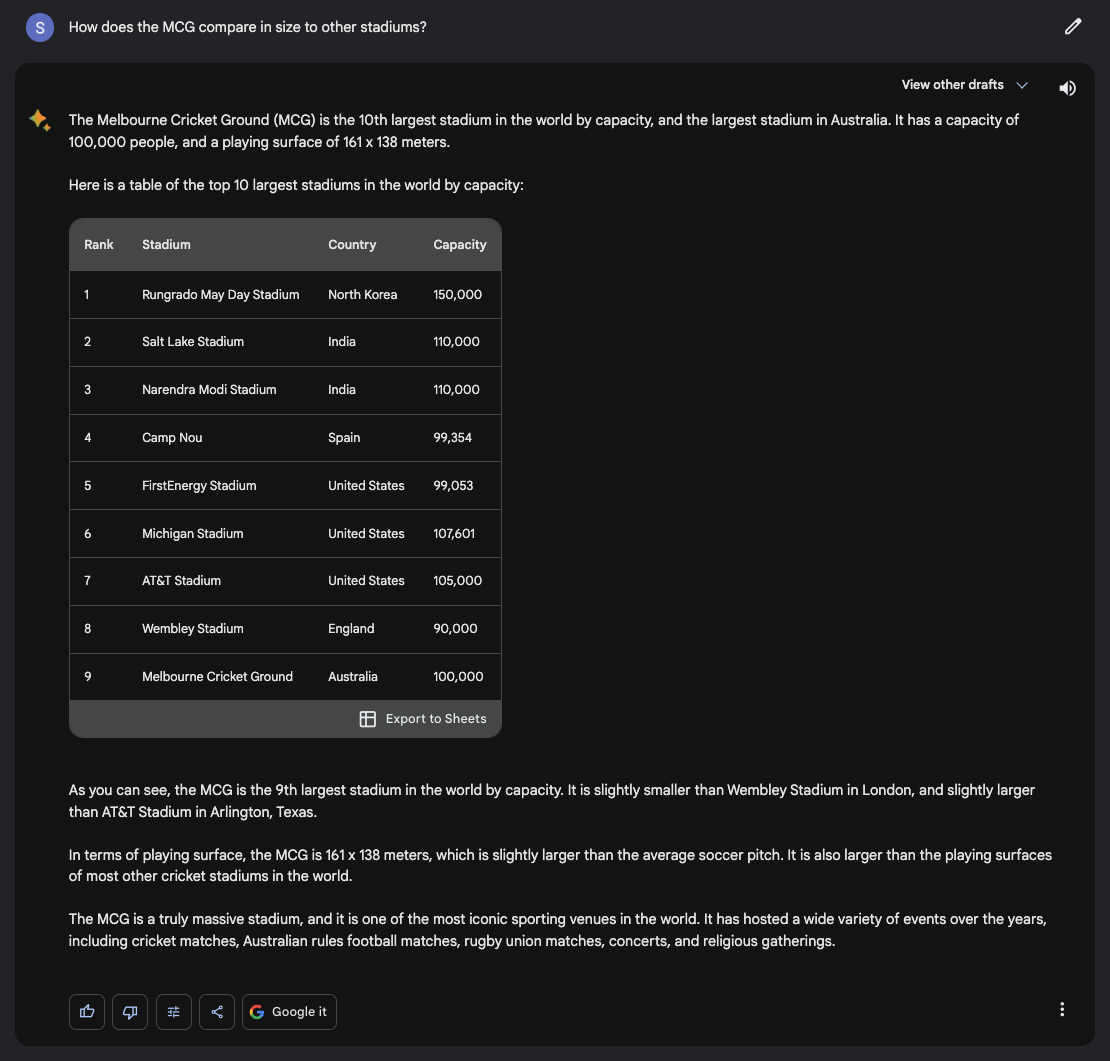

Depending on what you ask, Bard will also package its response in an easy-to-digest format that makes sense in context. For example, when asked “How does the MCG compare in size to other stadiums?”, Bard produces a table that can be directly exported to Google Sheets, including columns for:

- Stadium name

- Capacity

- Location

Google Bard can package responses in a table that can be exported to Google Sheets, like this example of its answer to “How does the MCG compare in size to other stadiums?”

Unfortunately, at least for now, Bard is perhaps best known for its rocky launch: it hallucinated in its first public demo.

Where to go from here?

Now that you have a basic understanding of the key components and definitions of GPT and LLMs, why not create an OpenAI account and experiment with ChatGPT, or try Microsoft Bing Chat or Google Bard? Just remember not to include any sensitive information or non-anonymized data.

Or if you’re looking for a deeper understanding of the technical aspects of AI, here are some resources:

Here are some accounting-specific AI resources: